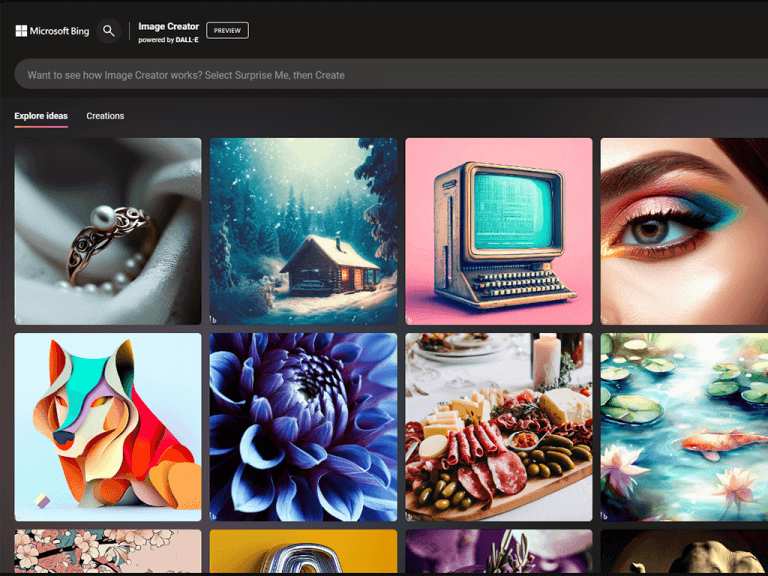

Microsoft’s Bing Image Creator, powered by OpenAI’s DALL-E 3 model, is having problems with its content filtering system. As Neowin reports, users are receiving content violation messages for seemingly innocuous text prompts, raising concerns that the filter is both inaccurate and overzealous.

The problem isn’t entirely new. When Microsoft launched Bing Image Creator in March 2023, its content filters were overly aggressive: according to Mikhail Parakhin, the current head of Microsoft Windows, even typing the word “Bing” would trigger a violation message.

Microsoft has acknowledged the recent reports of content warnings on seemingly benign prompts. Responding on X (formerly Twitter) on October 8, 2023, Mikhail Parakhin (@MParakhin) wrote, “Hmm. This is weird – checking,” which indicates that the company is aware of the problem and is likely investigating it.

One frustrated user tweeted, “Can hardly generate anything at all. Please fix the filtering.” The complaint reflects the experience of many users who find the overly restrictive filter getting in the way of their creative work.

AI-based content filtering systems can produce false positives, flagging content as problematic when it isn’t. This recent wave of content warnings may stem from an unintentional change in the system’s behavior.

Microsoft’s decision to increase GPU capacity in its data centers to address slowdowns in image generation shows its commitment to improving the service. The company will likely also fine-tune the content filtering system so that users can create images without unnecessary content warnings.