Microsoft releases open source artificial intelligence toolkit CNTK on GitHub

If you have used Cortana or Skype Translator on your Windows devices, you may have wondered what it takes to make computers understand human speech. Microsoft is betting big on deep learning and artificial intelligence, so its researchers chose to develop their own modern toolkit, called Computational Network Toolkit (CNTK), in the hope of reaching more breakthroughs in the machine learning field.

Today the company announced that it has made CNTK available to other developers on GitHub. According to Microsoft researchers, thanks to better communication capabilities, the new toolkit is faster at speech and image recognition than four other popular computational toolkits that developers rely on. Because deep learning tasks can take weeks to complete, this is a significant achievement for Microsoft.

To develop CNTK, Microsoft had to explore deep neural networks, which are systems that attempt to replicate the biological processes of the human brain. The company also relies on powerful computers with graphics processing units (GPUs) to run CNTK, as they are the best tools for processing the complicated algorithms that can improve artificial intelligence and speech and image recognition.

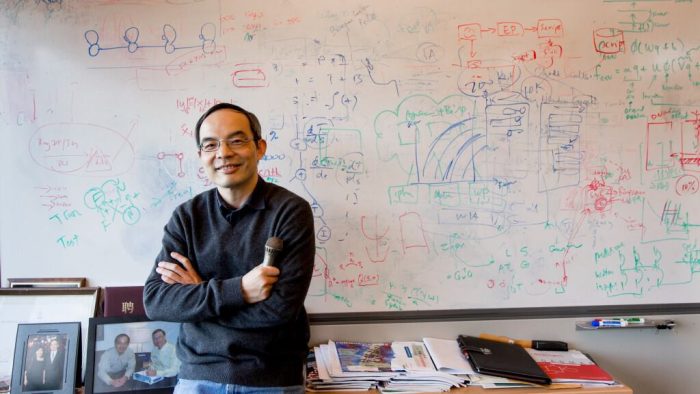

Xuedong Huang, Microsoft’s chief speech scientist, is proud to invite everyone to “join us to drive artificial intelligence breakthroughs,” with a toolkit “just insanely more efficient than anything we have ever seen.”

Because this tool now has an open-source license, it doesn’t matter whether you’re a researcher with a small budget, a deep-learning startup or a bigger company that processes a lot of data: CNTK can be used on a single computer or can scale across several GPU-based machines, allowing more flexibility for large-scale experiments. As artificial intelligence is generating a lot of interest in the technology field, I guess we can expect this new tool to soon attract attention from researchers and competitors in the deep learning field.